Jeton.AI

Edge AI Computing for Web3

Large AI Model Acceleration Platform on Edge Device for Web3 World

Artificial intelligence (AI) market size worldwide from 2020 to 2030

Release date

June 2024

Region

Worldwide

Survey time Period

2020 to 2030

Supplementary notes

Data provided by Statista Market Insights are estimates.

Definition:

Inference is the future

Now with LLM, inference only accounts for less than 20% the expense, it has huge room to grow

Training is the

COST

Inference is the revenue

INCOME

Jeton.AI: Running LLM Effectively on Mobile Device

Jeton.AI Unique Strength

- •Personalized/Compressed AI Model on mobile device

- •Unique Edge Computing Accelerator for Large Model running on Mobile Device

- •LLM and Visual AI Acceleration

- •Open SDK to build embedded AI DApp

- •Open Source of the Edge Computing Framework

- •World's Top AI Performance Optimization Team

Jeton.AI is for AI Democracy

Jeton.AI for On-device Multi/hybrid Model LLM AI

Jeton.AI: For On-device generative AI:

QWen2: 1.5B

Gemma2: 2B

LLAMA2: 7B

ChatGLM: 6B

Mistral-7B-Instruct: 7B

SD Model

Multimodal Model etc.

with native Huggingface compatible

With SOTA int4bit/3bit model quantization

Jeton.AI Can be Easily Integrated with Web3

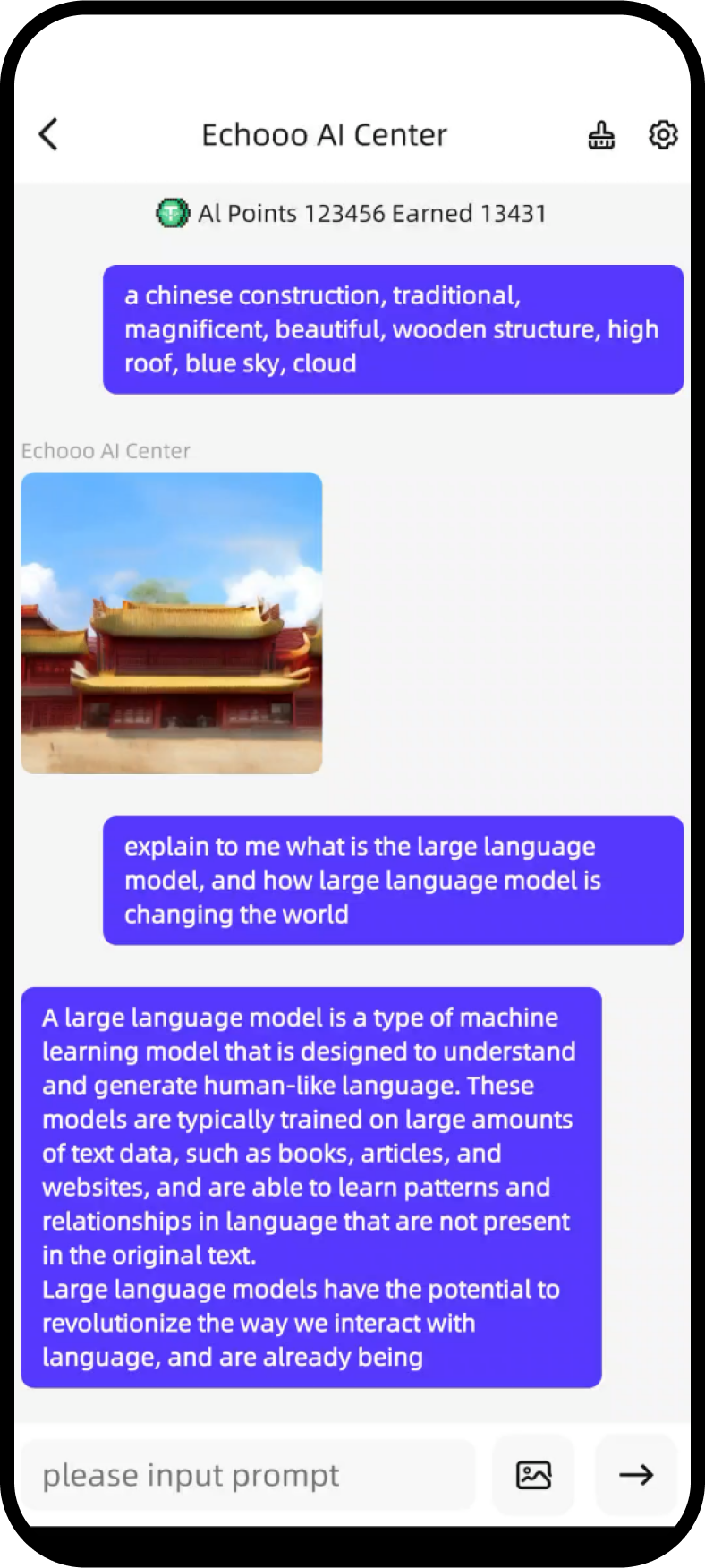

Jeton.AI for Text-to-Text Smart Chat AI

No GPU required

Data Sharing can earn Crypto Tokens

Jeton.AI for Text-to-Image AI

No GPU required

Data Sharing can earn Crypto Tokens

Jeton.AI Hybrid Model/Multimodal Support

No GPU required

Data Sharing can earn Crypto Tokens

OpenAPI for Partner/DApp Integration

Saving AI Infra Cost for up to 50% for DApps that provide AI Service

- • Model Pruning Service Edge AI Node

- • Acquiring Service Hybrid Edge

- • Acceleration with Cloud AI Service

Orbit Coin Tokenomics (Draft)

Tokens total:

• TBD

Token Inflation:

• Next 2 years 20% (Compensate for

the growth of supply and demand,

predicted growth rate 60%YOY)

Tokens distribution:

- • xx% the Team

- • xx% the Communities (10% reserved for

the first year consumption, 50% reserved) - • xx% for the rest

Tokens mining (Model Data Provider)

determined by the following factors:

- • Developing App for with AI Edge SDK

- • Time of App Usage

- • Customized Model Data sharing

- • Idling time

Token burning (Accelerator Users)

determined by the following factors:

- • LLM Tokens (exchanged to Crypto AI Tokens) used

- • Required Accelerator types

- • 5% Burned

Founding Team

World Best AI Performance Optimization Team

- • Expert of Best Workload Agnostic AI Acceleration Engine (Training and Inference)

- • Leading the Development of MNN.zone of Edge AI Computing

- • Expert of Large Scale AI/Rendering Workload Optimization

- • Leading Expert in GPU/FPGA/NPU Virtualization and Sharing

Breaking AI Performance Records:

- • Stanford DAWN Bench AI Benchmark World Record Breaker

- • TPCx-BB Benchmark World Record Breaker

APAC Largest Public Cloud GPU/ FPGA/ NPU Service's Technical & Business Founder of A* Cloud

Academic Contributions

• Top AI/Accelerator Conference Publications

• GPU/FPGA/NPU Patents worldwide

International Teams located in US, Singapore, HK